Apologies in advance for the length. TL;DR: skip to the end for a command line with a particular parameter (in bold text) that increases the Java heap space allocated to JOSM at launch.

I thank @Graptemys for editing in OSM, and with JOSM, which, as those who use it know, has a definite learning curve. While I’m at it, I thank everybody who edits in OSM, with any editor whatsoever!

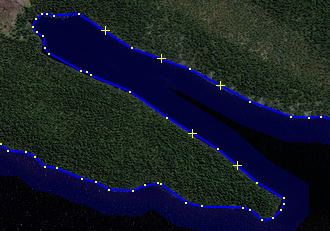

That said, I am also reluctant to see higher-resolution data become lower-resolution data, regardless of how “remote” it might be. (Earth is Earth is Earth; you are welcome to quote me on that.) There’s been a lot of good, quite technical advice offered here, including @amapanda_ᚐᚋᚐᚅᚇᚐ 's recent suggestion to use Wireframe View.

I’ve been editing massive (>100,000 node) objects in OSM for quite a while, including ginormous national forests about ten years ago, but I simply had to stop as it was crippling my machine. (It was a decent Mac at the time; I wouldn’t call it “big iron,” and it was big enough for most things, but those forests were just too much.) Of course, I’ve since upgraded, though for this scale of editing, 64 GB of RAM isn’t too much.

I did learn a few tricks with JOSM (it being a Java app); maybe they’ll work for you. Please try increasing the heap space you offer the program at launch, and you might not need to ask about “simplifying” OSM data (let’s call this what it is: dumbing down our data). I don’t want to dumb down our data for the convenience of editing, so get down to the command line (a shell in Unix/Linux, a Terminal window in macOS, a CLI in Windows…) and see if you can launch JOSM like this (I’m on a Mac, hence the path/location you see here):

java -jar /Applications/josm-tested.jar

You really should be able to do this. Actually, I also add -Dsun.java2d.opengl=true and you might, too.

I’m talking about radically increasing JOSM’s heap space. If you have 32 GB (the minimum for what I’m talking about) or 64 GB (or more) of RAM, this can seriously help. My usual JOSM startup command “beefs up” the heap to 2 gigabytes (up from 0.5 GB in the old days, then 1 GB, now 2 GB):

java -Dsun.java2d.opengl=true -Xmx2048m -jar /Applications/josm-tested.jar

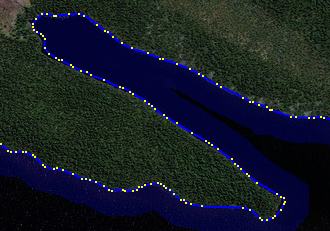

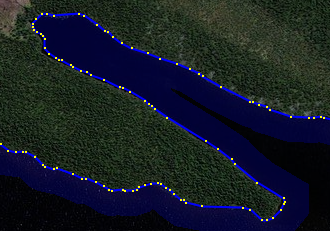

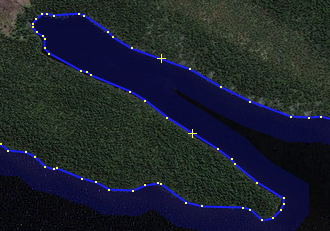

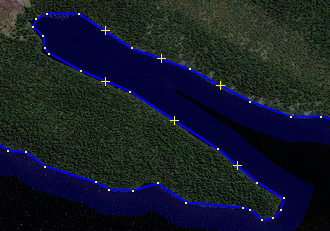

With that 2 GB heap, JOSM took a bit under 2 minutes (108 seconds) to open relation/6563858. Then I changed the -Xmx2048m (2 gigabytes of heap) command-line parameter to -Xmx8192m (8 gigabytes of heap). To be clear, the command line I use to throw a pretty large 8-gigabyte heap at JOSM is:

java -Dsun.java2d.opengl=true **-Xmx8192m** -jar /Applications/josm-tested.jar
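
If you find yourself switching heap sizes often, a small shell helper can build the command for you. What follows is only a sketch, not anything JOSM ships: `josm_cmd` and `JOSM_JAR` are names I made up for illustration, and the default path is simply the macOS location used above. It prints the command rather than running it, so you can check it before launching.

```shell
#!/bin/sh
# Hypothetical helper (not part of JOSM): print the launch command
# for a heap size given in whole gigabytes.
# JOSM_JAR is an assumed variable name; it defaults to the macOS path used above.
JOSM_JAR="${JOSM_JAR:-/Applications/josm-tested.jar}"

josm_cmd() {
    heap_gb="$1"
    # -Xmx accepts a size with an "m" suffix for megabytes.
    heap_mb=$((heap_gb * 1024))
    echo "java -Dsun.java2d.opengl=true -Xmx${heap_mb}m -jar ${JOSM_JAR}"
}

# Print the 8 GB variant.
josm_cmd 8
```

To actually launch JOSM from the printed line, something like `eval "$(josm_cmd 8)"` should work, provided the jar path contains no spaces.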

JOSM opened this gigantic lake in 44 seconds, about a 60% savings in loading and display time.
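
For anyone who wants to check that figure or plug in their own timings, here is the arithmetic as a quick shell sketch. The two numbers are the load times reported above; the variable names are just mine. Integer division rounds down, so it comes out at 59, which I rounded to "about 60%."

```shell
#!/bin/sh
# Load times reported above, in seconds.
before=108   # with -Xmx2048m
after=44     # with -Xmx8192m

saved=$((before - after))
pct=$((saved * 100 / before))   # integer division, rounds down

echo "saved ${saved} seconds, about ${pct}% less load time"
```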

While I’m all for “sane simplification” when warranted, let’s not (needlessly) simplify our data; let’s give our tools the resources they need to do their job properly.

I hope this helps.