AFAIK especially Overpass has also a problem in cases where multiple changes, from different changesets, were applied in the same second to a given object.

The Overpass API works as intended here.

To get back to the geometry problem: in the absence of an explicit linking of versions, ways and nodes are effectively linked via their timestamps.

In particular, this is a well-defined and easily understood concept. The geometry of a way at a given timestamp is computed from the coordinates of the latest versions of the referenced nodes that were already in place at that timestamp.
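To make the rule concrete, here is a minimal sketch in Python. It is not Overpass code; the names `NODE_HISTORY`, `node_at` and `way_geometry_at` and the sample data are made up for illustration. Breaking timestamp ties by the higher version number is a choice of this sketch only.

```python
from datetime import datetime, timezone

def parse_ts(text):
    """Parse an OSM-style UTC timestamp such as 2024-01-01T10:00:00Z."""
    return datetime.strptime(text, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)

# Hypothetical history: node id -> list of (version, timestamp, lat, lon)
NODE_HISTORY = {
    1: [(1, "2024-01-01T10:00:00Z", 10.0, 20.0),
        (2, "2024-01-01T12:00:00Z", 10.0, 20.5)],
    2: [(1, "2024-01-01T10:00:00Z", 11.0, 20.0)],
}

def node_at(node_id, when):
    """Return (lat, lon) of the latest version already in place at `when`, or None."""
    in_place = [v for v in NODE_HISTORY[node_id] if parse_ts(v[1]) <= when]
    if not in_place:
        return None
    # Latest timestamp wins; the version number only breaks ties (sketch assumption).
    version, ts, lat, lon = max(in_place, key=lambda v: (parse_ts(v[1]), v[0]))
    return (lat, lon)

def way_geometry_at(node_refs, when):
    """Resolve every referenced node at the same timestamp."""
    return [node_at(ref, when) for ref in node_refs]
```

For any timestamp there is exactly one answer, for example `way_geometry_at([1, 2], parse_ts("2024-01-01T11:00:00Z"))` resolves node 1 to its version 1, because version 2 was not yet in place.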

In contrast, if you were to allow multiple different coordinates for a node within the same second, you would forgo a well-defined geometry of ways altogether. An example:

<node id="1001" lat="50.1" lon="1.1" version="1" timestamp="2024-03-01T07:00:00Z"/>
<node id="1001" lat="50.1" lon="1.2" version="2" timestamp="2024-03-01T08:11:12Z"/>
<node id="1001" lat="50.1" lon="1.3" version="3" timestamp="2024-03-01T08:11:12Z"/>
<node id="1002" lat="50.2" lon="1.1" version="1" timestamp="2024-03-01T07:00:00Z"/>
<node id="1002" lat="50.2" lon="1.2" version="2" timestamp="2024-03-01T08:11:12Z"/>
<node id="1002" lat="50.2" lon="1.3" version="3" timestamp="2024-03-01T08:11:12Z"/>
<way id="100" version="1" timestamp="2024-03-01T07:00:00Z">
  <nd ref="1001"/>
  <nd ref="1002"/>
</way>

Now, if you were to base geometry on node versions, did the way at any point in time have

- a geometry (50.1 1.2) (50.2 1.3), based on applying 1001v2 then 1002v3, or

- a geometry (50.1 1.3) (50.2 1.2), based on applying 1002v2 then 1001v3, or

- neither, because some arbitrary rule skips some node versions and denies the existence of both of these intermediate states?
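The ambiguity can be checked mechanically. The following is only an illustrative sketch, with hypothetical helper names (`valid`, `interleavings`, `intermediate_states`): it enumerates every order in which the four same-second node versions from the example could have been applied, keeping each node's own versions in ascending order, and records the way geometry after each step.

```python
from itertools import permutations

# Same-second versions from the example: (node id, version, (lat, lon))
UPDATES = [
    (1001, 2, (50.1, 1.2)),
    (1001, 3, (50.1, 1.3)),
    (1002, 2, (50.2, 1.2)),
    (1002, 3, (50.2, 1.3)),
]
START = {1001: (50.1, 1.1), 1002: (50.2, 1.1)}  # version 1 of both nodes
WAY = [1001, 1002]                              # node refs of way 100

def valid(order):
    """True if each node's versions appear in ascending order."""
    last = {}
    for node_id, version, _coord in order:
        if last.get(node_id, 1) > version:
            return False
        last[node_id] = version
    return True

def interleavings():
    """All application orders that respect per-node version order."""
    return [order for order in permutations(UPDATES) if valid(order)]

def intermediate_states(order):
    """Way geometries after each update except the last (the final state)."""
    state = dict(START)
    states = []
    for node_id, _version, coord in order[:-1]:
        state[node_id] = coord
        states.append(tuple(state[n] for n in WAY))
    return states

GEOM_A = ((50.1, 1.2), (50.2, 1.3))  # 1001v2 together with 1002v3
GEOM_B = ((50.1, 1.3), (50.2, 1.2))  # 1001v3 together with 1002v2
```

There are six admissible orders. Some pass through `GEOM_A`, others through `GEOM_B`, and none passes through both, so the data alone cannot tell which of the two geometries the way ever had.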

OTOH, a changeset is, on a semantic level, a group of changes that the human user decided belong together. The community has even urged people more than once to give a meaningful comment on every changeset.

How likely is it that a user is shaping two groups of changes in parallel and completing them at the same moment? The much more likely course of events is that the user completes one changeset and then the next one seconds to minutes later, so that only artifacts of the upload process, or an editor that disregards the rules for good changesets, would produce this situation.

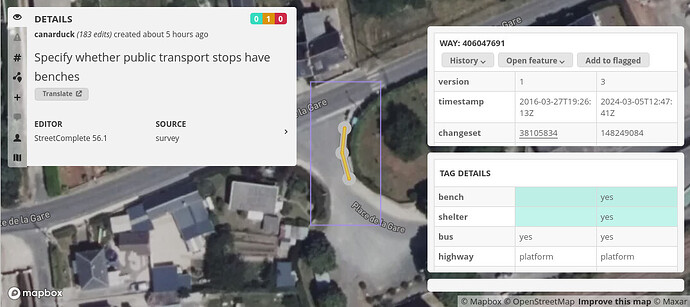

In fact, only StreetComplete exhibits that behaviour. In every other editor, the human users apparently work on one task after another, comment their changesets properly, and have no need to upload multiple changesets in the same second.

There is zero priority to implement a special mode for StreetComplete changesets in Overpass which, as a severe side effect, would make it much harder to explain the data model to fellow mappers and data users, for no real benefit.