Hi, we have tools to find broken URLs (such as Keep Right) and tools to find broken wikimedia_commons=File:* links (such as Category:Commons pages with broken file links). Is there a way to check for wikimedia_commons=Category:* values that point to non-existent categories, so we can fix them?

Are you looking for broken links on the wiki, or in OSM data?

In OSM data. Say someone made a typo, or the category has been deleted: is there a way to discover that and fix the value in OSM?

I don’t know of any automated way, but you can use Overpass to find all the objects and then write a script to check them:

    [out:csv(::type, ::id, wikimedia_commons)][timeout:120];
    (
      node["wikimedia_commons"~"Category:"];
      way["wikimedia_commons"~"Category:"];
      relation["wikimedia_commons"~"Category:"];
    );
    out body;

And as a script something like this:

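    #!/usr/bin/env bash
    # Report every row whose Commons category page answers with HTTP 404.
    while IFS='' read -r line; do
      # The third tab-separated column holds the wikimedia_commons value.
      category=$(cut -f 3 <<<"${line}")
      # Values that arrive wrapped in double quotes are unquoted with jq.
      if [ "${category:0:1}" = '"' ]; then
        category=$(jq -r . <<<"${category}")
      fi
      # Percent-encode the title so spaces and non-ASCII letters are valid in a URL.
      encoded=$(jq -rn --arg c "${category}" '$c | @uri')
      # HEAD request only; -L follows any redirect before the status is read.
      code=$(curl -s -L -o /dev/null -I -w "%{http_code}" "https://commons.wikimedia.org/wiki/${encoded}")
      if [ "${code}" -eq 404 ]; then
        echo "Broken: ${line}"
      fi
    # tail -n +2 skips the CSV header row.
    done < <(tail -n +2 "interpreter.csv")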

Where interpreter.csv is your saved Overpass data. Not very efficient, but maybe worth a shot?

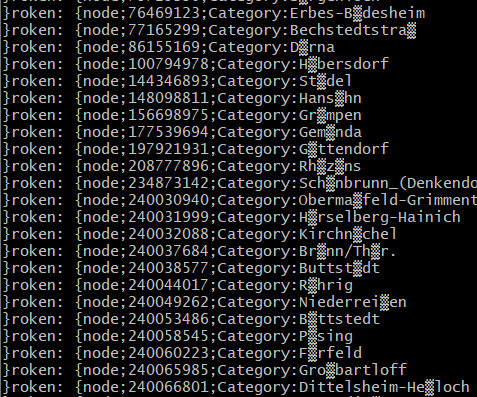

I’m not sure if I did something wrong, but it seems the script is reporting valid categories that contain umlauts as broken, which covers most of the German categories.

Looks like you got a semicolon-separated file, not a tab-separated one. In that case cut -f 3 <<<"${line}" becomes cut -d ';' -f 3 <<<"${line}"
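
If the separator is uncertain, the parsing step can also be done in Python. This is only a rough sketch: the function names `read_rows` and `commons_url` are made up for illustration, and it assumes the first line of the file is the header row that Overpass writes. It guesses the separator from that header and percent-encodes the title, so umlauts and spaces end up in a valid URL.

```python
import csv
import io
from urllib.parse import quote


def read_rows(text):
    """Yield (type, id, value) rows from Overpass CSV text, skipping the header."""
    header = text.split("\n", 1)[0]
    # Guess the separator from the header line: tab if present, else semicolon.
    delimiter = "\t" if "\t" in header else ";"
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    next(reader, None)  # drop the header row
    for row in reader:
        if len(row) >= 3:
            yield row[0], row[1], row[2]


def commons_url(value):
    """Build a percent-encoded Commons URL for a wikimedia_commons value."""
    title = value.strip().replace(" ", "_")
    # Keep ':' readable; everything outside the unreserved set is encoded as UTF-8.
    return "https://commons.wikimedia.org/wiki/" + quote(title, safe=":/")
```

The URL returned by `commons_url` can then be passed to curl or any HTTP client in place of the raw value.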

Getting all “wikimedia_commons=Category:* values” from OpenStreetMap using osmium and a .pbf file is a matter of:

    osmium tags-filter area.osm.pbf -R 'nwr/wikimedia_commons=Category*' -o commons_Category.opl

Then I typically use a python script to parse this output text file:

    #!/usr/bin/python3
    import re

    # A set keeps the duplicate check cheap for tens of thousands of titles.
    categories = set()

    # https://osmcode.org/opl-file-format/#encoding
    def unencode_opl_char(match):
        """ Given a match of r'%([0-9A-Fa-f]+)%', return the unicode char """
        return chr(int(match.group(1), 16))

    fn = 'commons_Category.opl'
    with open(fn, 'r', encoding='utf-8') as fh:
        for line in fh:
            # n1300148235 v3 dV c68983837 t2019-04-07T18:39:20Z i207581 uHjart Thistoric=memorial,name=Seemannsgrab,wikimedia_commons=Category:Seemannsgrab x9.6021629 y54.8759099
            opl_match = re.search(r'[,T]wikimedia_commons=Category:([^ ,]+)', line)
            if opl_match is None:
                # The value starts with "Category" but lacks the "Category:" prefix.
                continue
            category = re.sub(r'%([0-9A-Fa-f]+)%', unencode_opl_char, opl_match.group(1))
            categories.add(category)

    print('Found', len(categories), 'categories')

For a planet file that currently gives back 41617 categories.

You should take care to verify all of those without overloading the MediaWiki API.
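
One way to keep the load down is to ask the API about many titles per request instead of one page per curl. This is a minimal sketch, not a tested tool: the helper names, the one-second pause and the `user_agent` argument are my own assumptions. It relies on `action=query` accepting several titles joined with `|` (50 per request for ordinary clients) and marking non-existent pages as `missing`.

```python
import json
import time
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API = "https://commons.wikimedia.org/w/api.php"
BATCH = 50  # titles per query for clients without elevated limits


def batches(titles, size=BATCH):
    """Split a list of titles into chunks of at most `size` items."""
    for start in range(0, len(titles), size):
        yield titles[start:start + size]


def missing_titles(response):
    """Return the titles flagged as missing in a parsed formatversion=2 reply."""
    pages = response.get("query", {}).get("pages", [])
    return [page["title"] for page in pages if page.get("missing")]


def find_missing(titles, user_agent, pause=1.0):
    """Query the API batch by batch and collect the missing titles."""
    missing = []
    for batch in batches(titles):
        data = urlencode({
            "action": "query",
            "format": "json",
            "formatversion": "2",
            "titles": "|".join(batch),
        }).encode("utf-8")
        # POST keeps long title lists out of the URL.
        request = Request(API, data=data, headers={"User-Agent": user_agent})
        with urlopen(request) as reply:
            missing.extend(missing_titles(json.load(reply)))
        time.sleep(pause)  # stay polite between requests
    return missing
```

Calling `find_missing(["Category:Seemannsgrab"], "my-osm-check/0.1 (contact address)")` would return the titles that do not exist. Note that the API reports titles in its normalised form (spaces instead of underscores), so they may need mapping back to the OSM values.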

Downloading the all-titles dump from dumps.wikimedia.org first may be more efficient than many curls.
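
A rough sketch of that approach follows. It assumes the dump is a gzip file of tab-separated lines holding a namespace number and a title with underscores, that namespace 14 is Category, and that Commons capitalises the first letter of a title. The function names are invented for the example, so check the format against the file you actually download.

```python
import gzip

CATEGORY_NS = "14"  # namespace number of Category pages


def load_category_titles(lines):
    """Collect the titles of all Category pages from dump lines."""
    titles = set()
    for line in lines:
        namespace, _, title = line.rstrip("\n").partition("\t")
        if namespace == CATEGORY_NS:  # a header line never matches and is skipped
            titles.add(title)
    return titles


def normalise(value):
    """Turn 'Category:foo bar' into the dump form 'Foo_bar'."""
    title = value.partition(":")[2].strip().replace(" ", "_")
    return title[:1].upper() + title[1:]


def broken(values, known):
    """Return the OSM values whose category is not in the known set."""
    return [value for value in values if normalise(value) not in known]


def load_dump(path):
    """Read a gzip-compressed all-titles dump from disk."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        return load_category_titles(handle)
```

With the set from `load_dump` in memory, `broken(values, known)` checks every OSM value without a single network request. The results are only as fresh as the dump.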

FYI: I wrote CommonsChecker4OSM to find broken wikimedia_commons links using osmium and the Wikimedia data dumps, and created MapRoulette challenges for Commons categories and files.