This check is now included in this file: https://github.com/watmildon/josm-validator-rules/blob/main/rules/USStreetName-AdditionalChecks.validator.mapcss

I know it sucks. Will fix it in the AM.

Now it should be ready. This is the GeoJSON that we intend to hand out in the Tasking Manager. I don’t expect to scrub it any further in QGIS unless absolutely necessary.

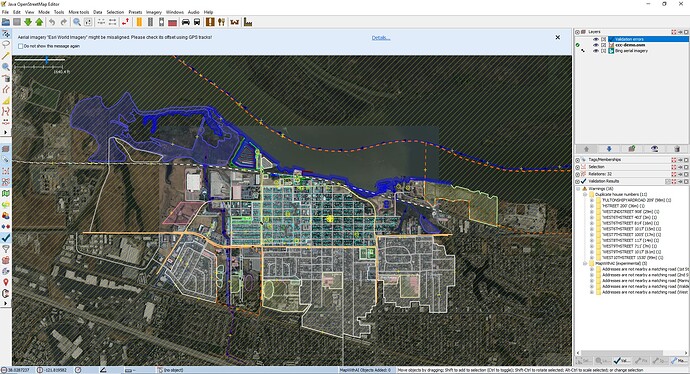

Without any manual intervention, this is what a decently complex neighborhood looks like:

16 warnings, all of which can easily be fixed by hand.

https://git.zyphon.com/public/contra-costa-import/src/branch/main/ccc-demo.osm

Demo .osm file with no validation errors. This is how the final data will be uploaded to OSM.

Here are some stats from your latest geojson file:

| Field | Issue | Count |
|---|---|---|
| City | Invalid capitalization | 69 |
| City | Missing | 108 |
| Housenumber | Missing | 21 |
| Housenumber | Possible multiple values separated by ';' | 598 |
| Postcode | Invalid, must be exactly five numeric digits | 5 |
| Postcode | Missing | 1686 |
| Street | PO Box not a valid street | 7 |
| Street | Invalid capitalization | 1960 |
| Street | Missing | 7 |
| Street | Possible multiple ';' separated values | 189 |
| Street | Repeated word | 65 |
| Street | Unexpanded abbreviation at start | 1 |
| Unit | Contains non-printable characters | 2 |
| Unit | Possible multiple ';' separated values | 22 |
| Duplicate addresses | Total features | 2935 |
| Duplicate addresses | Sets | 1268 |

The data still has multiple values in tags separated by ‘;’. You can blame JOSM, but that is how the data is going to go into OSM. Again, I recommend producing a .osm file directly; then you can move them apart spatially during the manual review. Decomposing the ‘;’-separated values afterwards will be difficult.

The data has 2,935 duplicate addresses in 1,268 sets.
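For anyone who wants to reproduce counts like these, here is a minimal Python sketch. It assumes the GeoJSON properties already use OSM tag names and treats two features as duplicates when all five `addr:*` values below match exactly; that tag list is an assumption, not necessarily what produced the numbers above. A "set" is one repeated address, and "total features" counts every member of every set.

```python
from collections import Counter

# Assumed address tags; the real checker may compare a different set.
ADDRESS_KEYS = ("addr:housenumber", "addr:street", "addr:unit", "addr:city", "addr:postcode")


def address_key(props):
    """Build a comparable tuple from a feature's address tags (missing tags become '')."""
    return tuple(str(props.get(k) or "").strip() for k in ADDRESS_KEYS)


def duplicate_stats(features):
    """Return (total features in duplicate sets, number of duplicate sets)."""
    counts = Counter(address_key(f.get("properties") or {}) for f in features)
    dup_sets = {key: n for key, n in counts.items() if n > 1}
    return sum(dup_sets.values()), len(dup_sets)


# Made-up sample features.
features = [
    {"properties": {"addr:housenumber": "1", "addr:street": "Main Street"}},
    {"properties": {"addr:housenumber": "1", "addr:street": "Main Street"}},
    {"properties": {"addr:housenumber": "2", "addr:street": "Main Street"}},
]
print(duplicate_stats(features))  # (2, 1)
```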

Also not shown above: some abbreviations, such as “BL” (for Boulevard) and “STE” (for Suite), have not been expanded. The Boulevard case should be caught by the validator; I am not sure about Suite.
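A minimal sketch of an unexpanded-suffix check in Python. The abbreviation table is a hypothetical, deliberately small subset (a real check would use the full USPS Publication 28 street suffix table), and it only looks at the last word of `addr:street`, where suffixes like “BL” normally appear. “STE” belongs in the unit rather than the street, so it is left out here.

```python
# Hypothetical, deliberately small subset of street-suffix abbreviations.
SUFFIX_ABBREVIATIONS = {
    "AVE": "Avenue", "BL": "Boulevard", "BLVD": "Boulevard", "CT": "Court",
    "DR": "Drive", "LN": "Lane", "RD": "Road", "ST": "Street",
}


def unexpanded_suffix(street):
    """Return the expansion if the street's last word is a known abbreviation, else None."""
    words = street.replace(".", "").split()  # "Blvd." -> "Blvd"
    if not words:
        return None
    return SUFFIX_ABBREVIATIONS.get(words[-1].upper())


print(unexpanded_suffix("Example BL"))     # Boulevard
print(unexpanded_suffix("Example Blvd."))  # Boulevard
print(unexpanded_suffix("Example Road"))   # None
```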

Many of the cities are misspelled, e.g. all but one of these must be wrong:

- Cocnord
- Comcord
- Concocrd
- Concod
- Concord
- CONCORD
- concord
- cONCORD
- Concord;San Pablo
- Conocord
- Conocrd
- Conord
- Cooncord
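Most of these variants can be caught by fuzzy matching against a list of known city names. Here is a minimal sketch using Python's standard `difflib`. The city list is a hypothetical, illustrative subset, and the 0.8 cutoff is an arbitrary choice. Every suggestion should still be reviewed by hand, because a close match is not necessarily the right city. Values containing ‘;’ are skipped, since they need to be split manually first.

```python
import difflib

# Hypothetical, illustrative list of valid city names.
VALID_CITIES = ["Concord", "Martinez", "San Pablo", "Walnut Creek"]


def suggest_city(raw, cutoff=0.8):
    """Suggest a canonical city for a misspelled or mis-capitalized value, or None."""
    if ";" in raw:
        return None  # multiple values need to be split by hand first
    by_lower = {city.lower(): city for city in VALID_CITIES}
    matches = difflib.get_close_matches(raw.strip().lower(), list(by_lower), n=1, cutoff=cutoff)
    return by_lower[matches[0]] if matches else None


for variant in ["Cocnord", "CONCORD", "cONCORD", "Conocrd", "Concord;San Pablo"]:
    print(variant, "->", suggest_city(variant))
```

With this list and cutoff, every variant above except “Concord;San Pablo” maps to “Concord”.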

I suspect that there are more errors that I haven’t had time to document.

This file is not ready for import.

> “STE” for Suite

It goes in Unit and should not be expanded.

> This file is not ready for import.

As shown above, it can be fixed with minimal manual edits, so once it is split into manageable chunks, it is ready to produce valuable data.

Also, please feel free to fix errors in the GeoJSON and document how you did it, so we can add it to the README for future imports.

Housenumber, possible multiple values separated by ';' 8

Postcode, missing 2

Just because there are no validator errors doesn’t mean the data is good to go.

How are you finding these? Please document your process.

It should be expanded, but in the unlikely case that it should not be, the data still has cases where it has been expanded.

The ccc-demo.osm still had errors after manual review.

You should easily be able to fix a lot of these issues, such as changing CONCORD to Concord. If you have specific questions, I can try to answer them.


Yes, I can manually fix it. If you have a QGIS workflow that can do it, please share it with us. We need the import workflow to be reproducible so that, when an update comes out, we can fast-track processing.
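One reproducible option, sketched in plain Python rather than QGIS: fix ALL-CAPS and all-lowercase street and city values with `string.capwords`, and leave mixed-case values (such as “cONCORD”) for review or fuzzy matching. The tag keys are assumptions about the GeoJSON. Names such as “McDonald” or “O’Hara” come out wrong from any simple capitalization rule, so they need an exception list or manual review. Because the script is deterministic, it can be rerun when a new extract comes out.

```python
import json
import string

# Tags to normalize; assumed to be present as GeoJSON properties.
CASE_KEYS = ("addr:street", "addr:city")


def fix_case(value):
    """Capitalize each word of an ALL-CAPS or all-lowercase value; leave mixed case alone."""
    if value.isupper() or value.islower():
        return string.capwords(value)
    return value


def normalize_collection(data):
    """Fix casing in place in a GeoJSON FeatureCollection dict; return the number of changed tags."""
    changed = 0
    for feature in data.get("features", []):
        props = feature.get("properties") or {}
        for key in CASE_KEYS:
            old = props.get(key)
            if isinstance(old, str):
                new = fix_case(old)
                if new != old:
                    props[key] = new
                    changed += 1
    return changed


def normalize_file(src, dst):
    """Read GeoJSON from src, normalize the casing, and write the result to dst."""
    with open(src, encoding="utf-8") as f:
        data = json.load(f)
    changed = normalize_collection(data)
    with open(dst, "w", encoding="utf-8") as f:
        json.dump(data, f, ensure_ascii=False)
    return changed
```

Usage would be something like `normalize_file("input.geojson", "output.geojson")`, where both file names are placeholders.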

> The ccc-demo.osm still had errors after manual review.

Please share the tools you used to find these, as I cannot find them.

It is a bit of a mess, but here is what I have currently:

It outputs each address that has a possible issue, and then lists the issues.

At the end of the output, it prints a summary of all the cities, streets, etc. that were found. This is an easy way to skim the data for other interesting things.

It also prints a list of duplicate addresses, including how many addresses had that value.

Finally, at the end, it prints a summary of the findings.
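For readers who want the general shape of such a checker, here is a minimal Python sketch. It is not the script referred to above. Each check appends a label to a per-feature issue list, and a `Counter` turns those labels into a summary like the stats posted earlier in the thread. The checks and labels are illustrative and assume the GeoJSON properties use OSM tag names.

```python
import re
from collections import Counter

# (tag, label) pairs checked for missing and ';'-separated values.
FIELDS = (("addr:city", "City"), ("addr:housenumber", "Housenumber"),
          ("addr:postcode", "Postcode"), ("addr:street", "Street"))


def text(props, key):
    """Return a tag value as a stripped string ('' if absent)."""
    value = props.get(key)
    return "" if value is None else str(value).strip()


def check_address(props):
    """Return a list of issue labels for one feature's properties."""
    issues = []
    for key, label in FIELDS:
        value = text(props, key)
        if not value:
            issues.append(f"{label}, missing")
        elif ";" in value:
            issues.append(f"{label}, possible multiple ';' separated values")
    postcode = text(props, "addr:postcode")
    if postcode and not re.fullmatch(r"[0-9]{5}", postcode):
        issues.append("Postcode, invalid, must be exactly five numeric digits")
    street = text(props, "addr:street")
    if re.search(r"\b(\w+) \1\b", street, re.IGNORECASE):
        issues.append("Street, repeated word")
    if re.search(r"\bP\.?\s*O\.?\s*BOX\b", street, re.IGNORECASE):
        issues.append("Street, PO Box not a valid street")
    return issues


def report(features):
    """Print each feature that has issues, then a count per issue label."""
    summary = Counter()
    for index, feature in enumerate(features):
        props = feature.get("properties") or {}
        issues = check_address(props)
        if issues:
            print(index, props, issues)
            summary.update(issues)
    print("--- summary ---")
    for label, count in sorted(summary.items()):
        print(label, count)
    return summary
```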

In addition to the Python code I sent (sorry, it is a mess), you can use the JOSM search function, e.g.

`"addr:housenumber"~"^.*;.*"`

finds any addr:housenumber that contains a ‘;’

JOSM search to find missing addr:postcode:

`(-"addr:postcode":) type:node new`

`:` means select if the tag exists on a feature

`-` negates that, so now we are searching for cases where there is not a postcode

`type:node` means only look at nodes

`new` means only look at new elements (not data that was downloaded from OSM)

@tekim I’m having trouble detecting the nonprintable characters (duh)

How did you find them?

The Python program I sent a link to finds them, but you can also find them with the JOSM search function:

`"addr:unit"~"^.*[^ -~].*$"`

`~` is the regular expression operator

`[^ -~]` matches any character that is not between a space and a tilde (the printable ASCII characters). `^` inside `[]` negates the set of characters listed in the brackets.

So the complete expression means: find the start of the string (`^`), followed by zero or more of any character (`.*`), followed by a non-printable character (`[^ -~]`), followed by zero or more of any character (`.*`), followed by the end of the string (`$`).
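The same character class works in Python, which makes it easy to see which invisible character is involved. Here is a minimal sketch. With `re.finditer` the bare class is enough, so the `^.*` and `.*$` parts are dropped. Note that `[^ -~]` also flags legitimate non-ASCII text such as accented letters, so every hit needs a human look.

```python
import re

# Same class as the JOSM search: any character outside space (0x20) through tilde (0x7E).
NON_PRINTABLE = re.compile(r"[^ -~]")


def find_non_printable(value):
    """Return (index, code point) pairs for every character outside printable ASCII."""
    return [(m.start(), f"U+{ord(m.group()):04X}") for m in NON_PRINTABLE.finditer(value)]


print(find_non_printable("Apt 4\u00a0B"))  # [(5, 'U+00A0')]
print(find_non_printable("Unit\t7"))       # [(4, 'U+0009')]
print(find_non_printable("Suite 200"))     # []
```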

Also, @watmildon added this to their custom validator.

Here is what I did for Virginia. Your case is different, but it will give you some idea.

Virginia data was generally of higher quality, but its organization was more complex (the address was split across a number of different fields).

The QC script is now here:

To run it, you will need to download a database of zip codes from

https://www.unitedstateszipcodes.org/zip-code-database/

(there is a free option)

There are still some things that @watmildon's validator checks for that I do not, such as whether all abbreviations have been expanded. If I get a chance, I will work on that, but since @watmildon has it covered, I didn’t think it was urgent.

The zip codes are interesting. A huge number of addresses have zip codes that the USPS says are for PO Boxes only. These may or may not be false positives. I have to imagine that most of the addresses in these data receive mail via USPS and so must have an address that is valid per USPS, yet the ones with a “PO BOX only” zip code are not valid per the USPS website (at least the ones that I checked).
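Here is a minimal sketch of the PO-Box-only zip code check. It assumes the downloaded database is a CSV with a `zip` column and a `type` column in which PO Box-only codes are marked `PO BOX`. Check the header of the file you actually download and adjust the names if they differ. The sample rows are made up. A hit is a lead for manual checking, not proof that the address is wrong.

```python
import csv
import io
from collections import Counter


def load_zip_types(csv_file):
    """Map zip code -> type from a CSV with 'zip' and 'type' columns (assumed layout)."""
    # zfill restores leading zeros in case the file stores e.g. "501" for "00501".
    return {row["zip"].strip().zfill(5): row["type"].strip() for row in csv.DictReader(csv_file)}


def po_box_only_counts(postcodes, zip_types):
    """Count addresses per zip code whose database type is 'PO BOX'."""
    return Counter(z for z in postcodes if zip_types.get(z) == "PO BOX")


# Made-up sample rows standing in for the downloaded database.
sample = io.StringIO("zip,type,primary_city\n11111,STANDARD,Example\n22222,PO BOX,Example\n")
zip_types = load_zip_types(sample)
print(po_box_only_counts(["11111", "22222", "22222"], zip_types))  # Counter({'22222': 2})
```

For the real file, open it with `open(path, newline="", encoding=...)` and pass the file object to `load_zip_types`. The path and encoding depend on the download.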

@Frigyes06 You asked about how I find issues. Another thing that one can do: In JOSM, search for all of the addresses that have a certain zip code, and then look for the ones that are isolated from the rest. These are probably errors.
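The “isolated from the rest” idea can also be automated. Here is a minimal sketch, assuming the points are available as `(postcode, (lon, lat))` pairs. A point is flagged when its nearest neighbor with the same postcode is farther away than a threshold. The 1 km default is an arbitrary, hypothetical value to tune per area. Postcodes with a single address are never flagged by this rule. The brute-force pairwise search is simple but slow for large postcodes, where a spatial index would scale better.

```python
import math
from collections import defaultdict

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (lon, lat) points given in degrees."""
    lon1, lat1, lon2, lat2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


def isolated_by_postcode(points, max_km=1.0):
    """Flag points whose nearest neighbor with the same postcode is more than max_km away."""
    groups = defaultdict(list)
    for postcode, coord in points:
        groups[postcode].append(coord)
    flagged = []
    for postcode, coords in groups.items():
        for i, here in enumerate(coords):
            distances = [haversine_km(here, other) for j, other in enumerate(coords) if j != i]
            if distances and min(distances) > max_km:
                flagged.append((postcode, here))
    return flagged


# Made-up sample points.
points = [
    ("12345", (-122.030, 37.970)),
    ("12345", (-122.031, 37.971)),
    ("12345", (-122.200, 37.800)),  # far from the other two
]
print(isolated_by_postcode(points))  # [('12345', (-122.2, 37.8))]
```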

I did take a look at CCC’s parcel data, and in many ways it is better, but in many ways it is worse. For example, it does not always have addresses for individual apartment units. Nevertheless, it could be a good reference as you work through the data manually.

As always, OSM: an enormous data regularization and validation project.